Adam Allevato

adam (at) allevato (dot) me

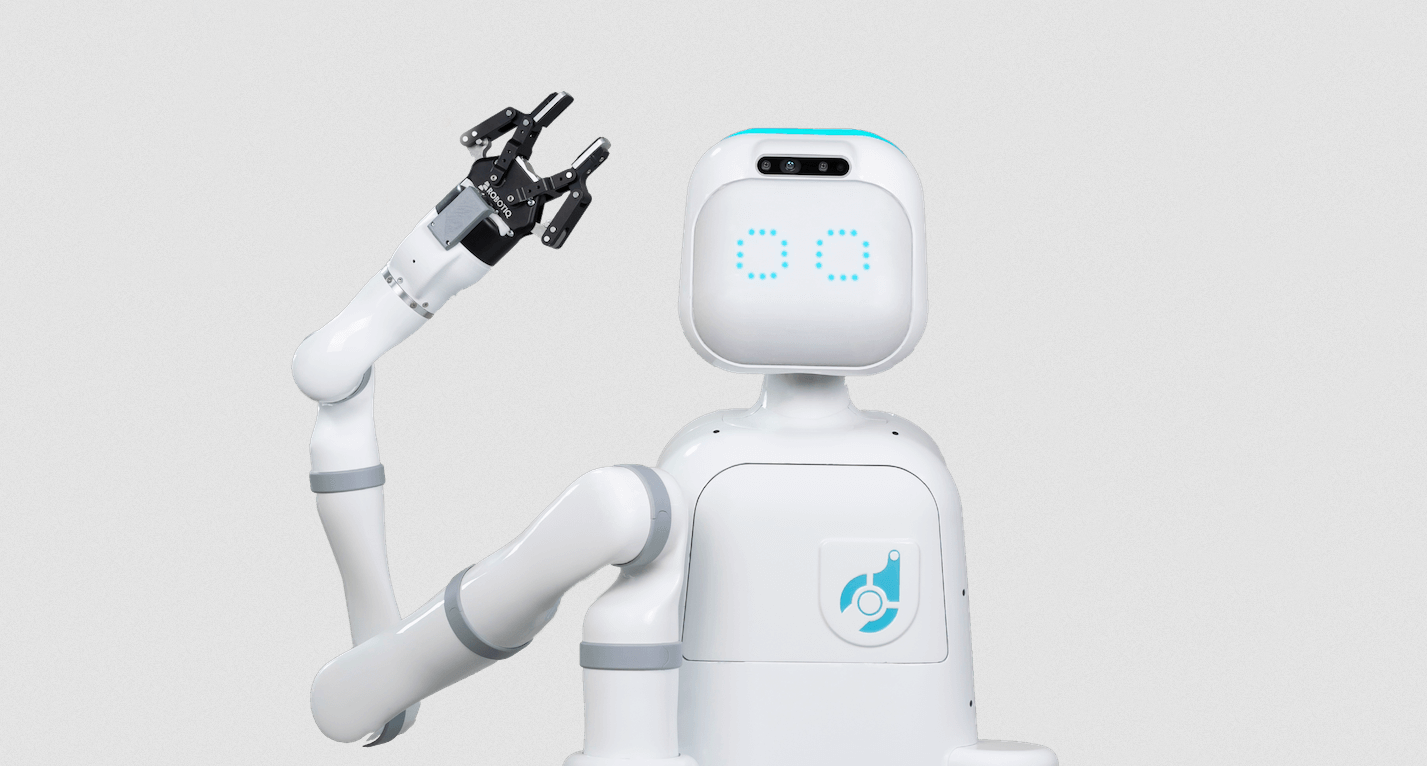

Autonomy for Indoor Deliveries

2018, 2021-Present

Moxi is Diligent Robotics' hospital delivery robot. I've led the work on most of Moxi's ML and classical perception capabilities that enable it to navigate hospitals, open doors, and ride elevators autonomously. I also built Moxi's "meep" sounds along with many other systems across the robot.

See press

Accurate Flying Inside Retail Stores

2020-2021

I redesigned the core behavior state machine for Pensa's autonomous store scanning drone, Daisy, making it more robust and easier to extend. I then built on this to prove out the first fully-autonomous multi-flight schedule for the drone. I also developed a custom fiducial marker-based visual localization system for precise indoor navigation in GPS-denied retail settings. The result was improved scan consistency, reduced drift, and better shelf coverage.

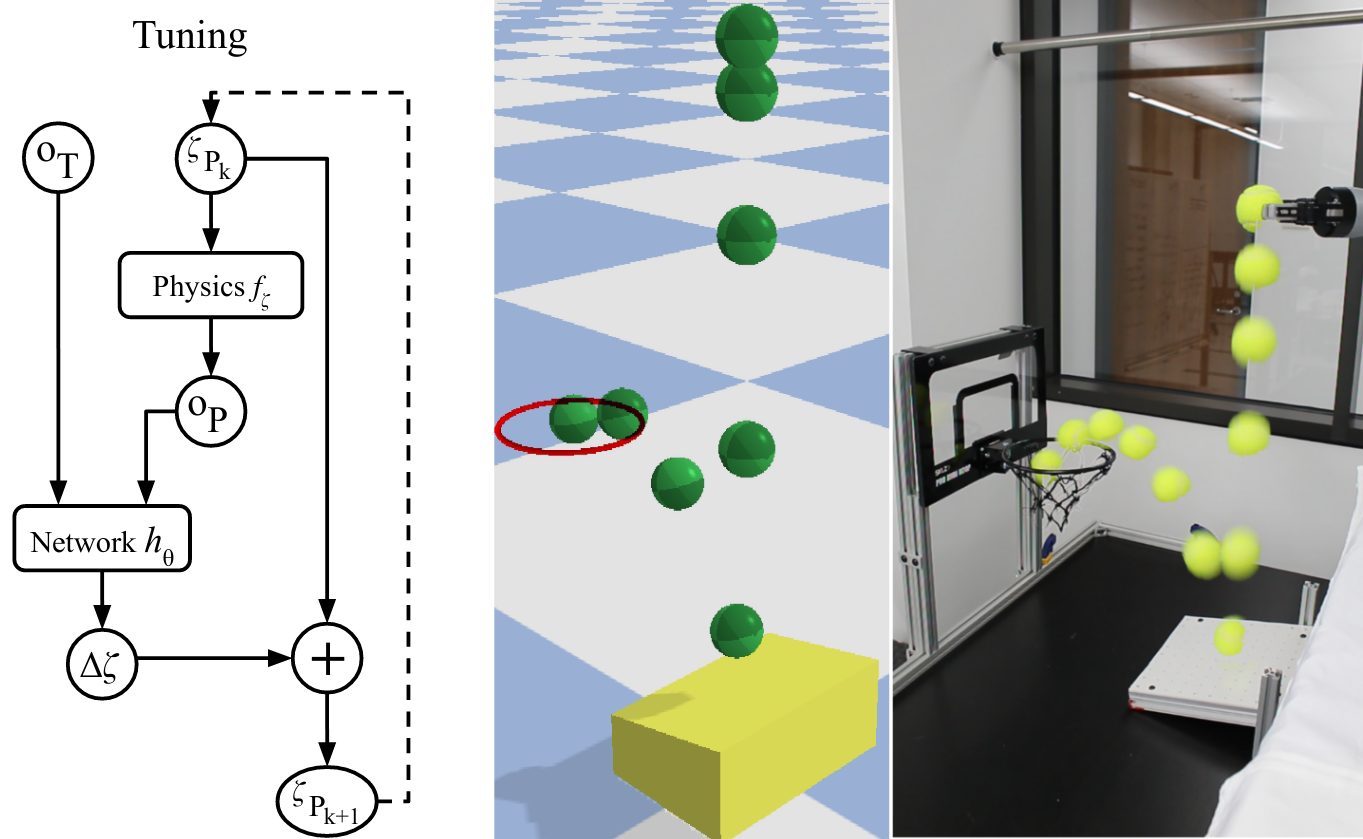

Sim-to-Real Deep Learning

2016-2020

In my PhD research at The University of Texas at Austin, I trained robot task models using simulation and deep learning, with the goal of improving human-robot interaction.

Conference paper, CoRL 2019

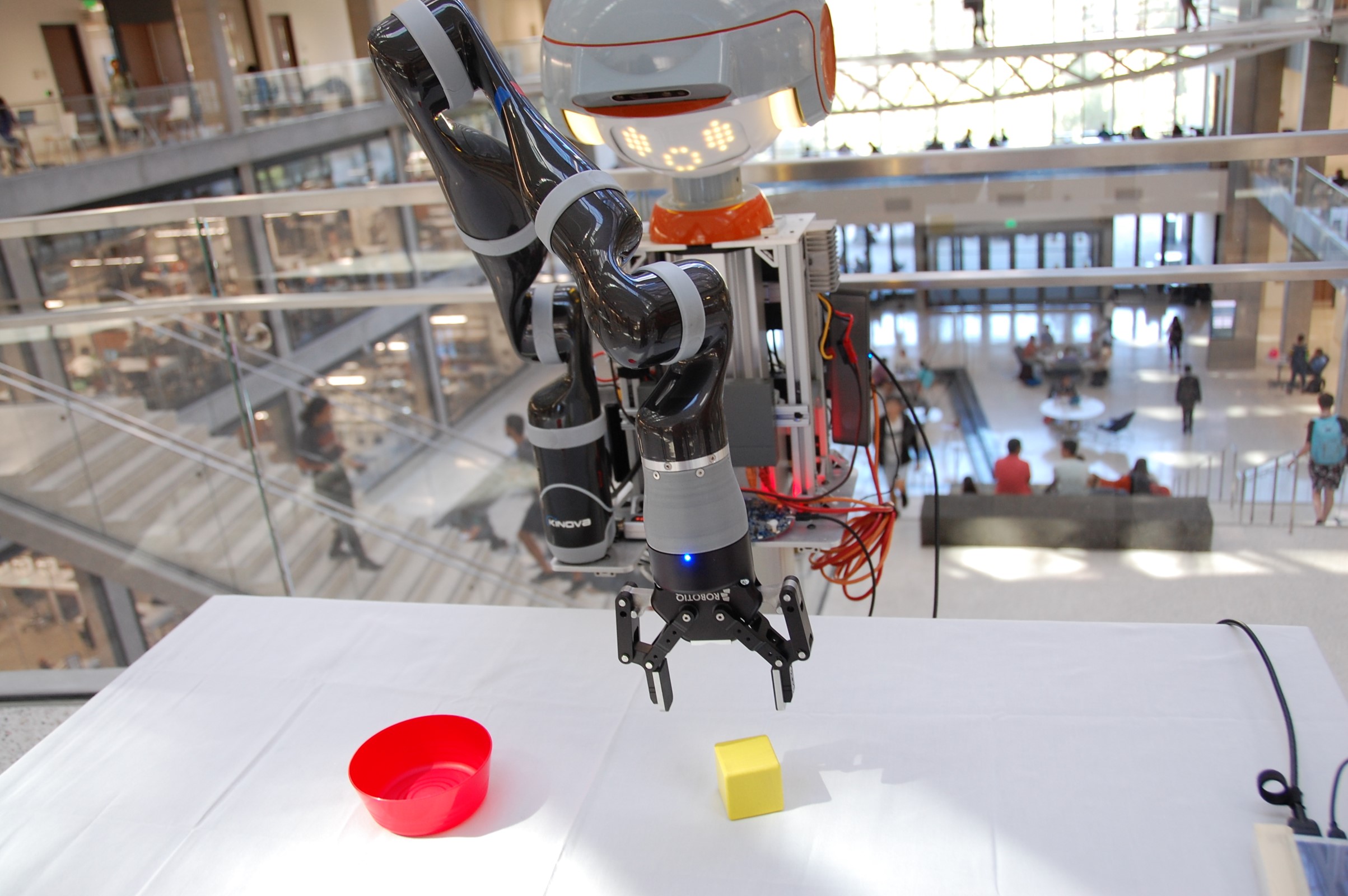

Robots in Nuclear Gloveboxes

2016-2018

Working with the Nuclear and Applied Robotics Group and Los Alamos National Laboratory, we deployed robots inside nuclear gloveboxes for mixed-waste sorting and manufacturing tasks. Much of the code is available on the lab's GitHub, which I helped run.

3D Computer Vision for Robots

2014-2017

For my master's thesis, I developed a 3D pose estimation library based on the Robot Operating System (ROS). It is well suited to robotic sorting, remote inspection, and small-part picking. We've since used the code in the 2015 Amazon Picking Challenge and in human-robot interaction studies.