What I learned building WhyTorch

04 Oct 2025 by Adam Allevato

I just finished V1 of WhyTorch, a visual explainer for basic PyTorch functions. It’s fully open source and MIT licensed, too.

Why I built it

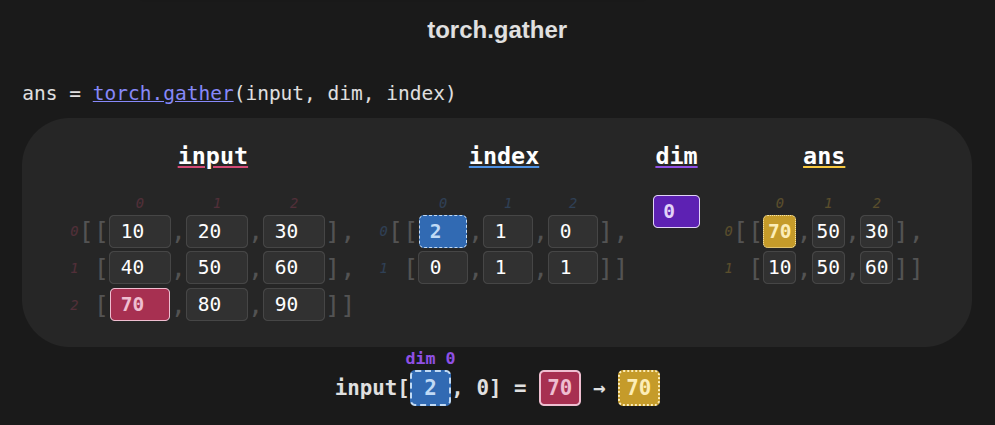

I built WhyTorch specifically because the function torch.gather was unclear to me even after re-reading the documentation a few times. Getting out pen and paper finally gave me an intuition for how the function worked, and I had the idea of visually showing how the elements in the different function arguments work together to produce each result.
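For reference, the rule that pen and paper eventually made clear can be written down in a few lines. This is a minimal sketch of `torch.gather` restricted to 2-D inputs, in plain JavaScript arrays. The helper name `gather2d` is made up for illustration, and this is not WhyTorch's actual code.

```javascript
// Hypothetical helper: torch.gather semantics for 2-D nested arrays.
//   dim = 0: out[i][j] = input[index[i][j]][j]
//   dim = 1: out[i][j] = input[i][index[i][j]]
// The output always has the same shape as `index`.
function gather2d(input, dim, index) {
  return index.map((row, i) =>
    row.map((idx, j) => (dim === 0 ? input[idx][j] : input[i][idx]))
  );
}

const input = [
  [1, 2],
  [3, 4],
];
const index = [
  [0, 0],
  [1, 0],
];

console.log(gather2d(input, 1, index)); // [[1, 1], [4, 3]]
console.log(gather2d(input, 0, index)); // [[1, 2], [3, 2]]
```

Each output element is picked by exactly one index element, which is why showing that pairing visually helps so much.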

After some planning, the specific goals of the project were:

- Useful: Be a learning tool for anyone who is familiar with PyTorch indexing

- Allow jumping straight from PyTorch documentation to WhyTorch (that informed the name)

- Detailed: Show how the outputs of PyTorch functions are derived from the inputs

- Clear: Display an explanation for every single output element

- Fun to work on: Ship a small project that was not subject to the whims of hardware breaking (robots are hard)

How I built it

First attempt: Python

I started with Python and htmx, intending to compute the outputs by calling PyTorch functions directly. This approach conflicted with several goals, so I abandoned it quickly:

- Front-end interactivity would be much simpler with a chunk of client-side JavaScript

- Every time someone interacted with the UI, I’d have to serve another request to recalculate the result

- It wasn’t clear how to mark elements of tensors as being related to each other, which was a key part of my “detailed” goal.

Starting over

I started over in vanilla JavaScript and CSS. After a quick AI-assisted proof of concept, I designed the Tensor and TensorItem classes first. A key feature I added was that TensorItems can be “related” to other TensorItems. This allows an item, when selected by the user, to traverse its relations recursively and find out how it is used across the entire function. The result of that traversal drives the “equation” display and the highlighting features in the UI.
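To make the "related items" idea concrete, here is a minimal sketch of how such a relation graph could be walked. The class shape, the `relateTo` and `collectRelated` method names, and the `label` field are all assumptions for illustration; the real WhyTorch classes may look quite different.

```javascript
// Hypothetical sketch of an item that remembers which items it came from.
class TensorItem {
  constructor(value, label) {
    this.value = value;
    this.label = label;
    this.relations = []; // items this item was directly derived from
  }

  relateTo(...items) {
    this.relations.push(...items);
    return this;
  }

  // Walk relations recursively, visiting each item only once.
  collectRelated(seen = new Set()) {
    for (const item of this.relations) {
      if (!seen.has(item)) {
        seen.add(item);
        item.collectRelated(seen);
      }
    }
    return seen;
  }
}

const a = new TensorItem(2, "a[0]");
const b = new TensorItem(3, "b[0]");
const sum = new TensorItem(a.value + b.value, "sum[0]").relateTo(a, b);
const out = new TensorItem(sum.value * 2, "out[0]").relateTo(sum);

const labels = [...out.collectRelated()].map((item) => item.label);
console.log(labels); // ["sum[0]", "a[0]", "b[0]"]
```

Selecting `out[0]` in a UI built on something like this would then highlight every item in the returned set.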

That was enough to start implementing simple functions, and as I added more complex functions, I added features to the engine and refactored as needed.

Implementing tensors

I kept things “simple” in the sense that I didn’t model a full tensor with striding, an arbitrary number of dimensions, and more. I only need up to 2 dimensions. I also knew that I would always want to visualize the tensors, so tensors effectively generate their own display HTML.
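As a rough picture of what "tensors generate their own display HTML" can mean, here is a small sketch. The `Tensor2D` name, the `toHTML` method, the table markup, and the `tensor` CSS class are assumptions for illustration, not the markup WhyTorch actually emits.

```javascript
// Hypothetical 2-D-only tensor that knows how to render itself.
class Tensor2D {
  constructor(rows) {
    this.rows = rows; // nested array: rows[i][j]
  }

  // Build one <tr> per row and one <td> per element.
  toHTML() {
    const body = this.rows
      .map((row) => `<tr>${row.map((v) => `<td>${v}</td>`).join("")}</tr>`)
      .join("");
    return `<table class="tensor">${body}</table>`;
  }
}

const t = new Tensor2D([
  [1, 2],
  [3, 4],
]);
console.log(t.toHTML());
// <table class="tensor"><tr><td>1</td><td>2</td></tr><tr><td>3</td><td>4</td></tr></table>
```

Limiting the model to two dimensions is what keeps the rendering a plain nested loop.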

The implementation I have works for the needs of the site. I realized I could have put more tensor logic into the TensorItem and Tensor classes themselves to make future functions easier. For example, if TensorItem supported “operator overloads” (even if just defined as functions), then relations could be automatically built up and used in equation display. As it is right now, each function has to set up its own bespoke equation display and assign relations between input tensor items and the items in the output tensor(s).
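A sketch of what that could look like, assuming the "operator overloads" are ordinary methods (JavaScript has no real operator overloading). The `TrackedItem` class and its `add`, `mul`, and `equation` members are hypothetical names, not part of WhyTorch.

```javascript
// Hypothetical item whose arithmetic methods record relations and an
// equation string automatically, so each function would not have to.
class TrackedItem {
  constructor(value, equation, relations = []) {
    this.value = value;
    this.equation = equation; // human-readable derivation
    this.relations = relations; // direct inputs to this item
  }

  add(other) {
    return new TrackedItem(
      this.value + other.value,
      `(${this.equation} + ${other.equation})`,
      [this, other]
    );
  }

  mul(other) {
    return new TrackedItem(
      this.value * other.value,
      `(${this.equation} * ${other.equation})`,
      [this, other]
    );
  }
}

const x = new TrackedItem(2, "x[0]");
const w = new TrackedItem(3, "w[0]");
const y = new TrackedItem(5, "y[0]");

const result = x.mul(w).add(y);
console.log(`${result.equation} = ${result.value}`);
// ((x[0] * w[0]) + y[0]) = 11
```

With something like this, a function implementation would only do the arithmetic, and the equation display would fall out of the recorded structure.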

The structure I chose naturally allowed for extension. For example, I did not originally plan for users to be able to change the values in the tensors, but this turned out to be quite simple to implement. That “natural” extension caused scope creep - allowing changing the dim (dimension) variable required me to generalize a lot of functions to work for different dimensions - but the result was well worth it.
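To give a sense of what generalizing over `dim` involves, here is a minimal sketch of a 2-D sum reduction that works along either dimension. The `sumAlongDim` helper is a made-up name, and a sum is only one example of the kind of function affected.

```javascript
// Hypothetical helper: sum a 2-D nested array along dim 0 or dim 1,
// matching how torch.sum(x, dim=...) collapses that dimension.
function sumAlongDim(rows, dim) {
  if (dim === 0) {
    // Collapse rows: one total per column.
    return rows[0].map((_, j) => rows.reduce((acc, row) => acc + row[j], 0));
  }
  if (dim === 1) {
    // Collapse columns: one total per row.
    return rows.map((row) => row.reduce((acc, v) => acc + v, 0));
  }
  throw new RangeError(`dim must be 0 or 1, got ${dim}`);
}

const x = [
  [1, 2],
  [3, 4],
];
console.log(sumAlongDim(x, 0)); // [4, 6]
console.log(sumAlongDim(x, 1)); // [3, 7]
```

Every function that was originally written for one fixed dimension needs a branch (or an index swap) like this once `dim` becomes user-editable.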

An aside on deployment

I tried a couple of new (for me) technologies for deploying the site - 11ty (https://www.11ty.dev/) for static site generation, and Fly.io (https://fly.io/) for hosting. I'll stick with 11ty indefinitely; I actually prefer it to Jekyll (which is what my personal site uses). I used Fly.io to handle HTTPS and hopefully scale under load if the site gets popular, and it is really easy to use. I do think it's overkill and a premature optimization, and I'm considering just dumping WhyTorch on shared hosting via FTP. Deploying from the terminal sure is nice though!

What I learned

I now understand very well how torch.gather and torch.scatter work, and more generally understand more tensor indexing operations. I learned about some functions and features I didn’t know PyTorch had, like the ability for torch.triu to give a smaller or larger triangle of values (check out the WhyTorch visualization). I also picked up 11ty and Fly.io as new tools.
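The `torch.triu` behavior comes from its `diagonal` argument: an element at row `i`, column `j` is kept when `j - i >= diagonal`, and zeroed otherwise. Here is a minimal 2-D sketch in plain JavaScript; the function below is an illustration of that rule, not WhyTorch's implementation.

```javascript
// Hypothetical helper mirroring torch.triu(input, diagonal) for 2-D arrays.
// Keeps elements on or above the chosen diagonal, zeroes the rest.
function triu(rows, diagonal = 0) {
  return rows.map((row, i) =>
    row.map((v, j) => (j - i >= diagonal ? v : 0))
  );
}

const m = [
  [1, 2, 3],
  [4, 5, 6],
  [7, 8, 9],
];

console.log(triu(m)); // [[1, 2, 3], [0, 5, 6], [0, 0, 9]]
console.log(triu(m, 1)); // [[0, 2, 3], [0, 0, 6], [0, 0, 0]]  (smaller triangle)
console.log(triu(m, -1)); // [[1, 2, 3], [4, 5, 6], [0, 8, 9]]  (larger triangle)
```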

More fundamentally, I have a deeper understanding of striding and tensor dimensioning. I read existing tensor implementations as a reference, and seeing how these concepts interact to elegantly implement different functions and slicing operations was a fun adventure.
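A small example of why striding feels elegant: with a flat buffer plus a shape and strides, a transpose is just a swap of the two, with no data copied. The sketch below is a generic illustration with a made-up `StridedView` class. It is not taken from any particular tensor library, and WhyTorch itself does not model strides.

```javascript
// Hypothetical 2-D view over a flat buffer.
// Element (i, j) lives at data[i * strides[0] + j * strides[1]].
class StridedView {
  constructor(data, shape, strides) {
    this.data = data;
    this.shape = shape;
    this.strides = strides;
  }

  at(i, j) {
    return this.data[i * this.strides[0] + j * this.strides[1]];
  }

  // Transpose by swapping shape and strides; the buffer is shared.
  transpose() {
    const [rows, cols] = this.shape;
    const [s0, s1] = this.strides;
    return new StridedView(this.data, [cols, rows], [s1, s0]);
  }

  toArray() {
    return Array.from({ length: this.shape[0] }, (_, i) =>
      Array.from({ length: this.shape[1] }, (_, j) => this.at(i, j))
    );
  }
}

const buffer = [0, 1, 2, 3, 4, 5];
const view = new StridedView(buffer, [2, 3], [3, 1]); // row-major 2x3
const flipped = view.transpose();

console.log(view.toArray()); // [[0, 1, 2], [3, 4, 5]]
console.log(flipped.toArray()); // [[0, 3], [1, 4], [2, 5]]
```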

On the implementation side, there are two main things I’d do differently if I were starting over or revisiting the project:

- Refactor the generation of HTML from tensors to be less verbose

- Switch to a more modular JS structure so that more code can be reused between functions

What’s next

There are a lot more functions to implement.

WhyTorch currently explains just a subset of the 400 or so “basic” PyTorch functions, that is, functions that are callable via torch.<function>(...). Some of these don’t make sense to visualize in WhyTorch, but there are still dozens more I’d like to add. An example is torch.einsum, which is an extremely powerful and useful function. While I understand it well, it would be a lot of work to reimplement it and present a useful explanation on the screen, so I decided to save it for future work.

Other features I’d like to add:

- A quiz feature, where the website would provide multiple-choice questions to help you train your understanding. I soon realized that the most useful part of the site was open-ended exploration, so a quiz could come later

- Allow users to change the shape of tensors (add/remove items/dims), not just modify values

I’m hoping that if the site proves popular, I’ll have more of a reason to add more functions and features - or even better, that others will help me implement them by contributing. Even if no one contributes, I hope that what I’ve built serves as a useful tool for learning.